Adventure Scientists: Final Delivery

Posted November 30, 2022 ‐ 6 min read

One step closer to field biology at scale

This blog post describes the last four months of the Adventure Scientists project. To read about the first part of the project, check out the blog post on the MVP launch.

In early November, we delivered the final set of features for Adventure Scientists on their soon-to-be-launched platform. It’s been a joy to work with this group of motivated, passionate, and brilliant folks, and it’s thrilling to see this (in our mutual opinion) excellent product enter the world and make a difference for field biology.

In this post, I want to describe what the last four months of work on this project have looked like, and draw some lessons from them. I’d estimate our work for Adventure Scientists over this period broke down approximately as follows:

- 40%: tweaking existing features based on user feedback

- 30%: building new features to meet product specifications

- 10%: site reliability, performance and accessibility tweaks

- 10%: fixing bugs

- 10%: nailing down minutiae in the specifications

Constant Feedback and Iteration

From the beginning of this project, we knew that a constant stream of user feedback would be critical to its success. The team at Adventure Scientists bought into this idea and were fabulous partners, routinely getting their volunteers, scientists, and staff to try out the platform and its specific features, and leaving detailed ideas, suggestions, and questions.

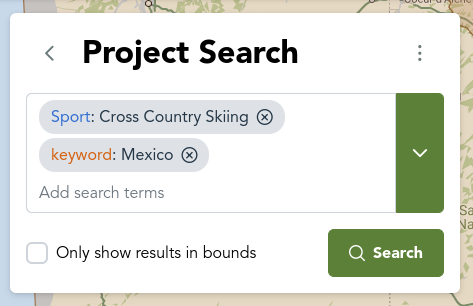

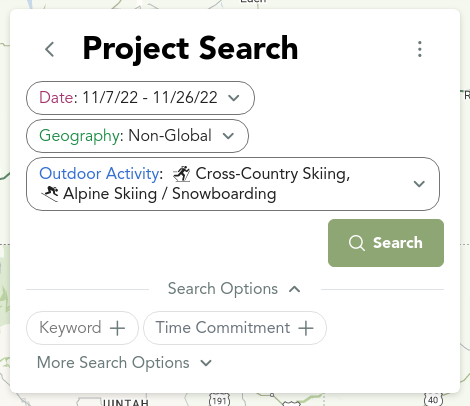

By my (admittedly approximate) calculation, we responded to user feedback with a concrete change to the platform 86% of the time. Some of these tweaks were small; some were structural. A great example is how the search box changed over time. Through a series of feature ideas, complaints about screen size, and watching folks use the search features, we tried out a bunch of different ways of presenting search information, and ultimately arrived at a design that is much better than where we started.

The search box is just one of hundreds of ways in which this project is better off because of the feedback and brainstorming of potential users. A few more:

- A staff-person’s questions about iconography led us to add labels and tooltips to every button.

- A scientist’s questions about terminology led to a site-wide pass to use exactly the same terms everywhere.

- A retiree’s frustration using the site on a 6-year-old smartphone made us redesign many of our components for tiny mobile devices.

The fact that this product is so much better off today than it was at the start of this phase is a strong reminder of how critical a diversity of perspectives is in product design and testing, and how valuable it is to get the product in front of real users.

New Features

There are too many features that we built in this period to list, so I’ll let the final product do the talking when it launches in the next few months. Instead, there is one trend in building these features that is worth highlighting.

The time from the start of development to the launch of the MVP was about the same as the time from the MVP to final delivery, even though many of the MVP features were iterated on after launch, and the features added after the MVP represent about 80% of the site’s functionality.

This is notable because it shows the difference between the two phases of the work. In the pre-MVP period we were validating the technology, establishing patterns, and building only the barebones functionality. Once that was done, building out the full feature set was comparatively quick, despite it comprising significantly more code and business logic. This is a nice example of the Pareto Principle, and something we’ll keep in mind for future project planning.

The Quiet Stuff that Matters

We endeavored from the beginning to build a site that would meet Adventure Scientists’ needs today and into a future of expansion and growth. There were three main things we worked on in this space that might not be obvious to a visitor, but are critical for the longevity and health of the site.

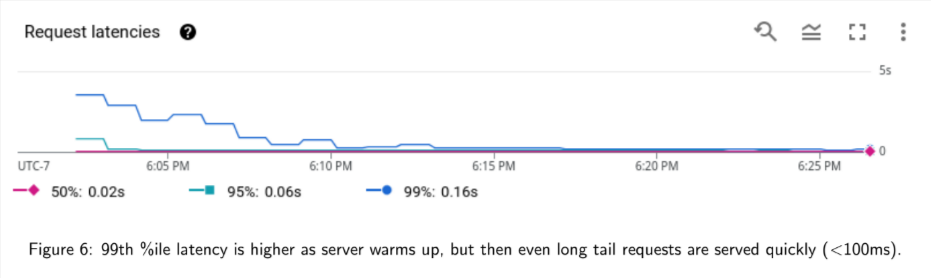

- Performance and Load Testing: we spent quite a bit of time making sure that the site can scale up to Adventure Scientists’ highest projected usage. This involved iteratively tweaking the code to be more efficient in terms of cost, time, and bandwidth.

- Offline: we used the excellent tooling provided by the growing Nuxt 3 and PWA community to build a robust offline experience, while dramatically reducing the overall size of the site, enabling it to load quickly and work reliably over poor internet connections.

- Accessibility: we believe strongly that the internet is for everyone, and we made a number of subtle (and often invisible) changes to the site to meet accessibility standards.

Bugs

It happens. Writing code means wrangling bugs.

![An XKCD comic: [Cueball sits at a computer, staring at the screen and rubbing his chin in thought. A friend stands behind him.] Cueball: Weird — My code's crashing when given pre-1970 dates. Friend [pointing at Cueball and his computer]: Epoch fail!](/blog/adventure-scientists-final-delivery/images/xkcd-bug.png)

We settled on an approach to bugs that aimed to minimize their impact on our client. By having separate development and production environments (pushing to development only after we had vetted the code ourselves, and to production only after it had spent time in development and been vetted externally), we were able to express different levels of certainty about our code, iterating quickly without any regressions being discovered in production.

Over the course of development we made 58 changes to the database schema, made 11 sets of feature releases to Dev, and 7 to Prod. None ever had to be rolled back in production.

Nitty Gritty Specifications

No matter how long a specifications document you write, during implementation there will be areas of uncertainty that you need to resolve with the client. Thankfully, Adventure Scientists provided us with a consistent point of contact on the project, so we could send questions about which of several competing alternatives they preferred, or sit down together to talk through the options. Though most of the specifications we’d co-written with the client before implementation ended up being used, several were modified, tweaked, or discarded when theory met implementation reality.

This highlights one of the most critical lessons from this project for our organization: close collaboration, partnership, and frequent communication between developer and client are at the core of what made this project successful, and what we’ll seek to emulate in the future. We want to deeply thank Adventure Scientists for being such a fantastic partner on this journey.

We also share their excitement to bring this product to the world when they decide to launch it in the new year. Stay tuned!